Jillian C. York is the author of Silicon Values: The Future of Free Speech Under Surveillance Capitalism. The views expressed in this commentary belong solely to the author. Read more from As Equals. For information about how the series is funded and more, check out our FAQs.

(CNN) Facebook confirmed this week that, despite the Taliban's takeover of Afghanistan, it would continue to ban the group from using its platform, citing the United States’ inclusion of the Taliban on its Specially Designated Global Terrorists list, as well as the company’s own policy against “dangerous organizations.”

The Taliban swiftly responded by criticizing Facebook, a company that for many years has claimed to promote free expression, for violating the group’s right to free speech.

Although it is imperative to point out that the Taliban does not respect international standards on human rights, including the right to free expression, its cynical jab at the social media giant exposes a fundamental hypocrisy in the dynamic between nation-states and international technology companies.

This is not the first time a social media company has been criticized for its content policies, nor is it unusual for that criticism to come from constituencies with diametrically opposed views. In 2008, YouTube came under fire from then-Senator Joseph Lieberman, who wrote to Google CEO Eric Schmidt imploring him to ensure that YouTube was enforcing its own community standards against terrorism. Lieberman posited that YouTube’s removal of terrorist content from its platform would be a “singularly important contribution to this important national effort.”

YouTube responded that while its standards did indeed prohibit terrorist content, the company was not able to monitor every account. Instead, it relied on a system of commercial content moderation in which users reported material that seemed to run afoul of the rules and workers made quick decisions about where, exactly, to draw the line. Not long after that, the senator began appealing to the company to remove content purportedly coming from none other than the Afghan Taliban.


Facebook is responsible for making determinations about the speech of more than a billion of the world’s citizens. While, as an American company, it legally has the right to restrict any type of expression it sees fit, Facebook CEO Mark Zuckerberg has for many years promoted the platform as a means of making the world “more open and connected,” and famously said in a 2019 speech that “giving everyone a voice empowers the powerless and pushes society to be better over time.”

But Zuckerberg’s actions don’t always match his words, and his ideas about free expression seem to stem more from his own cultural values than from any international standard. Facebook famously applies double standards when it comes to the human body, and has come under fire for removing comments that, some argue, amount to users clapping back at their harassers. But when it comes to adjudicating terrorist and extremist content, the company’s actions often have even more severe consequences.

Facebook uses some combination of human moderation and machine learning technology to remove the speech of groups that run afoul of its ban on “dangerous organizations,” but in doing so it often removes artistic content, satire, and documentation of human rights abuses. Even more troublingly, the company has been accused of removing counterspeech against terrorist groups: speech that comes from some of the most local and vulnerable communities.

It’s not hard to see how, in the current scenario, Facebook could easily end up removing critical speech against the Taliban from its platform, silencing the very people it purports to protect.

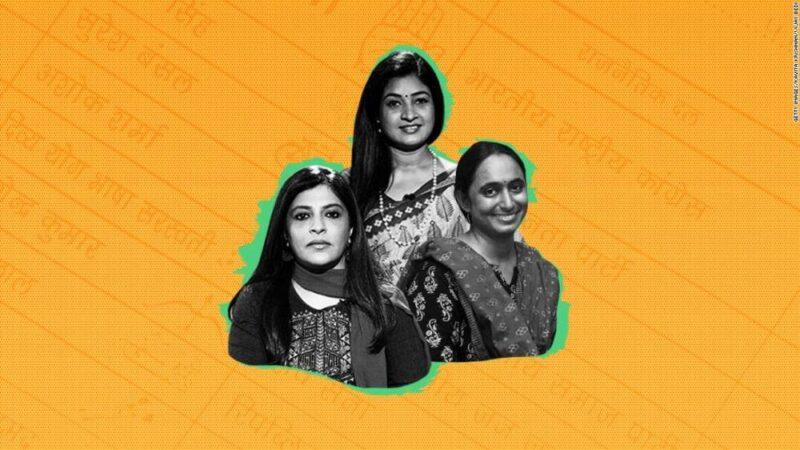

Facebook’s own independent Oversight Board, established in late 2020, has expressed concern about the manner in which Facebook defines terrorism and applies its policy against “dangerous organizations.” Echoing civil society demands, the Board has stated that Facebook should be transparent about the regulatory frameworks that underpin the policy and about how automation is used to moderate content in this category. It has also repeatedly urged the company to ensure that users whose content is wrongfully removed have the opportunity to appeal those decisions.

When it comes to the Taliban, which is sanctioned by the Treasury Department, Facebook is in a tricky position: the company could potentially face substantial financial and legal penalties for hosting its members’ speech. Nevertheless, Facebook must enact the Board’s baseline recommendations for transparency and accountability.

Beyond the legal quandary facing the company, however, lies a bigger question: When a group that some, or even much, of the world deems a terrorist organization takes over the governance of a nation-state, who should have the ultimate say in whether that group has equal access to the world’s most popular platform?

The United States has long exerted military and diplomatic power over other nations’ politics, but today, American companies like Facebook have the power to silence not only foreign leaders but domestic ones too, as we saw in January when Facebook booted Donald Trump from its platform.
The idea that an unelected “leader” like Mark Zuckerberg should hold that much power should worry all of us.

This is why it is imperative that Facebook, and every other speech platform, listen to their users and to global civil society, and enact measures that make their decisions transparent and accountable to users and the public.

Header image caption: Taliban fighters take control of the Afghan presidential palace after Afghan President Ashraf Ghani fled the country, in Kabul, Afghanistan, on August 15, 2021.

Source: edition.cnn.com