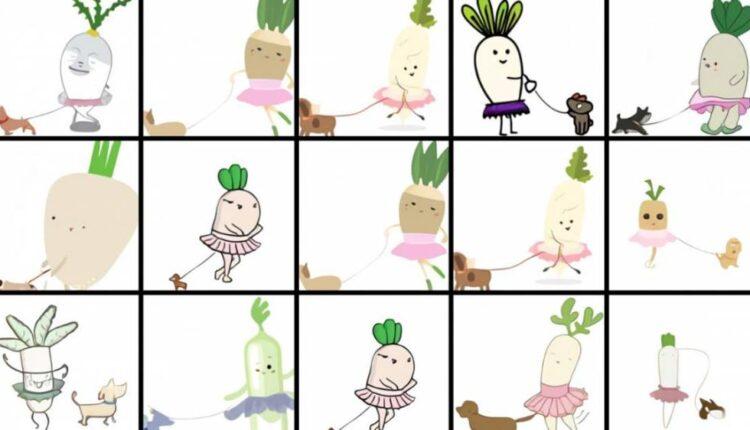

(CNN Business)An artist can draw a baby daikon radish wearing a tutu and walking a dog, even if they’ve never seen one before. But this kind of visual mashup has long been a trickier task for computers.

Now, a new artificial-intelligence model can create such images with clarity — and cuteness.This week nonprofit research company OpenAI released DALL-E, which can generate a slew of impressive-looking, often surrealistic images from written prompts such as “an armchair in the shape of an avocado” or “a painting of a capybara sitting in a field at sunrise.” (And yes, the name DALL-E is a portmanteau referencing surrealist artist Salvador Dalí and animated sci-fi film “WALL-E.”)

A new AI model from OpenAI, DALL-E, can create pictures from the text prompt “an illustration of a baby daikon radish in a tutu walking a dog”.While AI has been used for years to generate images from text, it tends to produce blobby, pixelated images with limited resemblance to actual or imaginary subjects; this one from the Allen Institute for Artificial Intelligence gives a sense for the recent state of the art. However, many of the DALL-E creations shown off by OpenAI in a blog post look crisp and clear, and range from the complicated-yet-adorable (the aforementioned radish and dog; claymation-style foxes; armchairs that look like halved avocados, complete with pit pillows) to fairly photorealistic (visions of San Francisco’s Golden Gate Bridge or Palace of Fine Arts).The model is a step toward AI that is well-versed in both text and images, said Ilya Sutskever, a cofounder of OpenAI and its chief scientist. And it hints at a future when AI may be able to follow more complicated instructions for some applications — such as photo editing or creating concepts for new furniture or other objects — while raising questions about what it means for a computer to take on art and design tasks traditionally completed by humans.Read More

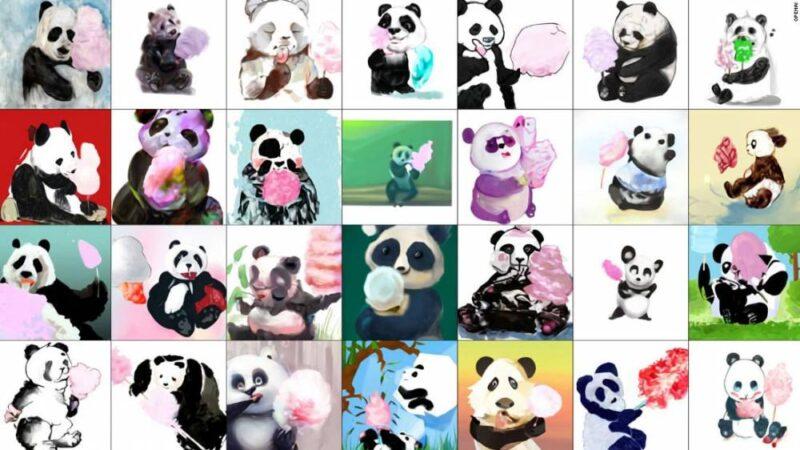

An armchair in the shape of an avocado

DALL-E is a version of an existing AI model from OpenAI called GPT-3, which was released last year to much fanfare. GPT-3 was trained on the text from billions of webpages so that it would be adept at responding to written prompts by generating everything from news articles to recipes to poetry. By comparison, DALL-E was trained on pairs of images and related text in such a way that it appears able to respond to written prompts with images that can be surprisingly similar to what a person might imagine; OpenAI then uses another new AI model, CLIP, to determine which results are the best. (CNN Business was not able to experiment with the AI independently.)Aditya Ramesh, who led the creation of DALL-E, said he was surprised by its ability to take two unrelated concepts and blend them into what appear to be functional objects, such as avocado-shaped chairs, and to add human-like body parts (a mustache, for instance) to inanimate objects such as vegetables in a spot that makes sense.OpenAI, which was cofounded by Elon Musk and counts Microsoft as one of its backers, has not yet determined how or when it will release the model. For now, the only way you can try it is by editing prompts on the DALL-E blog post by choosing different words to complete them from drop-down lists: For instance, the prompt for “an armchair in the shape of an avocado” can be changed to “a clock in the form of a Rubik’s cube.” Even within those limits, however, there are plenty of ways to manipulate the prompts to see what DALL-E will produce, whether that’s a rather ’80s-style cube clock, a cross-section view of a human head, or a tattoo of a magenta artichoke.Mark Riedl, an associate professor at the Georgia Institute of Technology who studies human-centered AI, said the images produced by the model appear “really coherent.” Despite the fact that he can’t access DALL-E directly, it is clear from the demo that the AI understands certain concepts and how to blend them visually. “You can see it understands vegetables, it understands tutus, it understands how to put a tutu on a vegetable,” he said, noting that he’d probably place a tutu on a vegetable in a similar fashion.

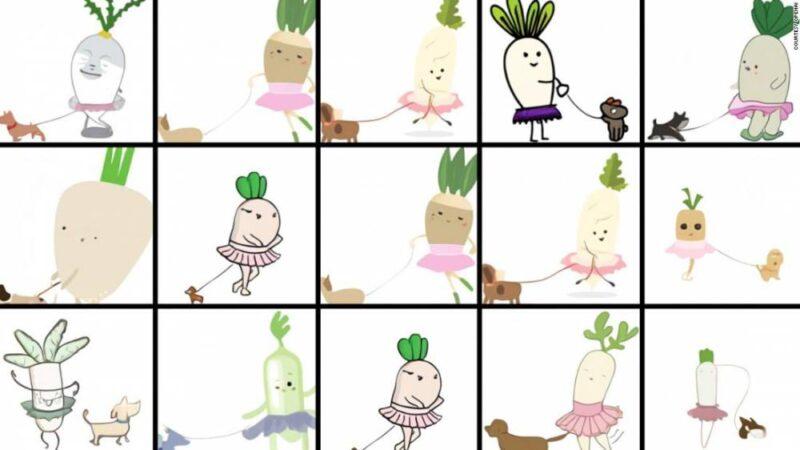

A flamingo playing tennis with a cat

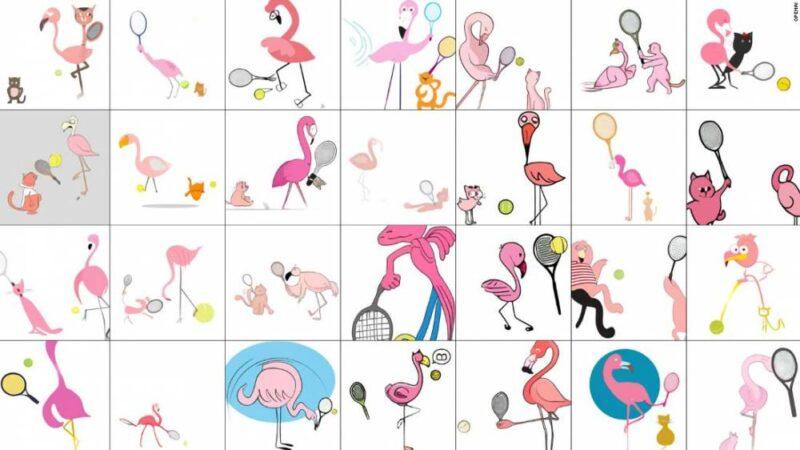

OpenAI did allow CNN Business to send in several original prompts that were run through the model. They were: “A photo of a boat with the words ‘happy birthday’ written on it”; “A painting of a panda eating cotton candy”; “A photo of “the Empire State Building at sunset” and “An illustration of a flamingo playing tennis with a cat.”

DALL-E has a harder time with more complicated prompts; this one requested “an illustration of a flamingo playing tennis with a cat”.The resulting images seemed to reflect strengths and weaknesses of DALL-E, with pandas that appeared to calmly munch on cotton candy and computerized visualizations of a sort-of Empire State Building as the sun set. It turns out that it’s hard for the model to write longer words or phrases on objects (and perhaps it wasn’t extensively trained on images of boats), so the boats it depicted looked a bit weird and just one of the results we received had a very clear “happy birthday.” It’s also difficult for DALL-E to deliver clear results for prompts that include lots of objects. As a result, many of the flamingo-playing-tennis-with-a-cat images looked a bit, well, strange.”While it’s successful at some things, it’s also kind of brittle at some things,” Ramesh explained.

These pandas eating cotton candy were produced by an AI model named DALL-E.

Riedl, too, tried to test DALL-E by editing one of the prompts to something he expected it wouldn’t have much training data on: a shrimp wearing pajamas, flying a kite. That combination led to images that were fuzzier and more blob-like than those of the radish in the tutu walking a dog.Perhaps that’s because the more well-trodden a concept is in the dataset — which was pulled from what’s on the internet — the more “comfortable” an AI model will be at playing around with it, he said. Which is to say that what really surprised him is how many pictures of cartoon vegetables there must be online.

Source: edition.cnn.com